Mech_Simulation_VR_Gameplay

Gameplay Recording

Abstract

This thesis explores inverse kinematics and the integration of procedural and physics-based animation in VR game development, using OpenXR for VR support and NVIDIA PhysX for physics simulation.

Inspired by mech VR games such as Underdogs by One Hamsa, this study focuses on creating and blending procedural and physics animations within my custom C++ engine, alongside developing a foundational VR game development template for the Windows platform.

- Game Genre: Mech Simulation VR Game for PC
- Role: Game Designer and Programmer
- Team Size: Individual Project
- Language: C++
- Engine: My Custom C++ Game Engine "Duality Engine"

UML Class Diagram

I integrated my custom Skeleton class with the Actor class to generate procedural and physics-based animations in this game.

PhysX runs in a fixed update loop, and the Game class implements its simulation event callback interface to handle actor collision events that drive gameplay.
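A fixed update loop is commonly built on a time accumulator. The sketch below is a minimal illustration of that idea, not the engine's actual code: `FixedStepClock`, the 60 Hz step, and the frame-time clamp are all assumptions. In a PhysX-based engine, each reported step is where `PxScene::simulate` and `PxScene::fetchResults` would typically be called.

```cpp
#include <algorithm>

// Hypothetical helper: accumulates variable frame time and reports how many
// fixed-size physics steps should run this frame.
struct FixedStepClock {
    double fixedDt = 1.0 / 60.0;  // assumed step size, not taken from the thesis
    double maxFrameTime = 0.25;   // assumed clamp so a long stall cannot queue endless steps
    double accumulator = 0.0;     // unsimulated time carried over between frames

    // Returns the number of fixed steps to simulate for a frame lasting frameDt seconds.
    int Advance(double frameDt) {
        accumulator += std::min(frameDt, maxFrameTime);
        int steps = 0;
        while (accumulator >= fixedDt) {
            accumulator -= fixedDt;
            ++steps;
        }
        return steps;
    }
};
```

The caller would run the physics step once per returned count, so simulation results do not depend on the render frame rate.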

This thesis presents a debugging pipeline that enables running and testing gameplay without wearing a VR headset. This significantly improves efficiency when working on IK controls, procedural animation, and physics interactions. Since most of the development time does not require headset use, this workflow boosts productivity and streamlines iteration.

The flowchart above outlines the application’s runtime logic, which branches based on the success or failure of OpenXR instance creation.
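The branch on instance creation could be sketched as follows. This is an assumption-laden illustration: `RunMode`, `SelectRunMode`, and the `tryCreateXrInstance` callback are hypothetical names, and the sketch assumes that a failed creation falls back to a desktop mode without a headset. In a real build the callback would wrap the OpenXR call `xrCreateInstance` and report whether it succeeded.

```cpp
#include <functional>

// Hypothetical run modes for the two branches of the runtime logic.
enum class RunMode { VR, DesktopDebug };

// tryCreateXrInstance is a hypothetical stand-in for a wrapper around
// xrCreateInstance that returns true when instance creation succeeds.
inline RunMode SelectRunMode(const std::function<bool()>& tryCreateXrInstance) {
    // Assumed behavior: fall back to a headset-free desktop mode on failure.
    return tryCreateXrInstance() ? RunMode::VR : RunMode::DesktopDebug;
}
```

Deciding the mode once at startup keeps the rest of the frame loop free of repeated headset checks.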

OpenXR Development Framework

In Debug and Release build configurations, the Windows camera is decoupled from the VR headset, allowing alternate gameplay views while the headset renders normally. This requires triple render draw calls, which lowers frame rates but improves development convenience.

In contrast, the Shipping build mirrors the VR view to the PC screen by copying from the OpenXR swapchain to a DirectX 11 2D texture for rendering on Windows, reducing draw calls and improving performance. The flowchart below illustrates these two rendering pipelines.
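The difference between the two pipelines can be summarized in a small sketch. It assumes that the "triple" render consists of one scene pass per eye plus one pass for the decoupled window camera, and that Shipping renders only the two eye passes before copying. `BuildConfig`, `FramePlan`, and `PlanFrame` are hypothetical names, not engine code.

```cpp
// Hypothetical build configurations matching the ones named in the text.
enum class BuildConfig { Debug, Release, Shipping };

// Hypothetical per-frame plan.
struct FramePlan {
    int scenePasses;           // full scene renders per frame
    bool mirrorFromSwapchain;  // copy the VR image to the window instead of re-rendering
};

inline FramePlan PlanFrame(BuildConfig config) {
    if (config == BuildConfig::Shipping) {
        // Assumed: left eye + right eye, then a texture copy for the desktop window.
        return {2, true};
    }
    // Assumed: left eye + right eye + an independent window camera.
    return {3, false};
}
```

In a Direct3D 11 renderer, the mirror step is the kind of work `ID3D11DeviceContext::CopyResource` can perform when source and destination textures are compatible; whether the engine uses that exact call is not stated in the text.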

Build Configuration-Based Rendering Pipeline

Game Control Instructions

To support players sensitive to motion sickness, I implemented snap turning, which instantly rotates the mech by a fixed angle when the right joystick is pushed past a threshold. To avoid repeated snaps, the joystick must return below that threshold before another turn can be triggered.
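The threshold-and-release behavior described above can be sketched as a small state machine. The names and numeric values here (`SnapTurn`, a 0.7 threshold, a 45 degree step) are illustrative assumptions, not values from the project.

```cpp
#include <cmath>

// Hypothetical snap-turn helper; threshold and angle are assumed values.
struct SnapTurn {
    float threshold = 0.7f;     // stick deflection needed to trigger a snap
    float snapDegrees = 45.0f;  // rotation applied per snap
    bool armed = true;          // false until the stick returns below the threshold

    // stickX is the right joystick's horizontal axis in [-1, 1].
    // Returns the yaw change in degrees for this frame (0 when no snap fires).
    float Update(float stickX) {
        if (std::fabs(stickX) < threshold) {
            armed = true;  // stick released far enough: allow the next snap
            return 0.0f;
        }
        if (!armed) {
            return 0.0f;   // still held past the threshold: ignore
        }
        armed = false;
        return stickX > 0.0f ? snapDegrees : -snapDegrees;
    }
};
```

Calling `Update` once per frame yields a single rotation per push, no matter how long the stick is held.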

Players who do not experience motion sickness in VR preferred smooth turning. To accommodate both preferences, I added a toggle on the A button, so players can switch between smooth turning for finer control and snap turning for quick directional changes.
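A toggle like this is usually driven by the button's rising edge so that holding the button does not flip the mode every frame. The following is a minimal sketch under that assumption; `TurnModeToggle` and the default mode are hypothetical.

```cpp
enum class TurnMode { Snap, Smooth };

// Hypothetical toggle that switches modes once per button press.
struct TurnModeToggle {
    TurnMode mode = TurnMode::Snap;  // assumed default mode
    bool wasPressed = false;         // button state from the previous frame

    // Call once per frame with the current A-button state.
    TurnMode Update(bool isPressed) {
        if (isPressed && !wasPressed) {  // rising edge only
            mode = (mode == TurnMode::Snap) ? TurnMode::Smooth : TurnMode::Snap;
        }
        wasPressed = isPressed;
        return mode;
    }
};
```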

Turning Modes

Motion Controls

Traditional VR movement, like smooth walking or teleportation, doesn’t work well for mech combat, where attacks rely on active arm motion. To solve this, I designed a system where holding the grip button and moving the controller applies directional force to the mech.

Holding the grip on one controller allows free movement while the other hand stays ready for attacks. Holding both grip buttons and moving both controllers in the same direction doubles the movement force, enabling faster traversal. This method reduces motion sickness and supports natural, physical motion control.
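One way to obtain this doubling is to sum a per-controller force. The sketch below assumes the force is proportional to controller velocity and points in the same direction as the motion; the sign convention, the `forcePerSpeed` gain, and all type names are hypothetical.

```cpp
struct Vec3 {
    float x, y, z;
};

// Hypothetical per-controller input for one frame.
struct ControllerState {
    bool gripHeld;  // true while the grip button is held
    Vec3 velocity;  // controller velocity, assumed to be in the mech's local space
};

// Adds one controller's contribution if its grip is held.
inline void AddGripForce(const ControllerState& c, float forcePerSpeed, Vec3& force) {
    if (!c.gripHeld) {
        return;
    }
    force.x += c.velocity.x * forcePerSpeed;
    force.y += c.velocity.y * forcePerSpeed;
    force.z += c.velocity.z * forcePerSpeed;
}

// Sums both controllers, so two hands moving the same way double the force.
inline Vec3 ComputeMoveForce(const ControllerState& left,
                             const ControllerState& right,
                             float forcePerSpeed) {
    Vec3 force{0.0f, 0.0f, 0.0f};
    AddGripForce(left, forcePerSpeed, force);
    AddGripForce(right, forcePerSpeed, force);
    return force;
}
```

The resulting vector would then be applied to the mech's physics body each fixed step.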

Rotation is managed by moving both controllers in opposite directions while holding both grip buttons. The system interprets this as a turning gesture, adjusting the mech orientation based on motion speed.
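The turning gesture could be detected along these lines. This sketch considers only each controller's forward/backward speed, treats opposite signs as a turn, and scales the yaw rate by the average speed difference; the axis choice, the sign convention, and the `yawPerSpeed` gain are all assumptions.

```cpp
// Returns a yaw rate (degrees per second) for the two-handed turning gesture,
// or 0 when the gesture is not active.
// leftForwardSpeed / rightForwardSpeed: signed controller speed along the
// mech's forward axis. yawPerSpeed is a hypothetical tuning gain.
inline float ComputeYawRate(bool leftGrip, bool rightGrip,
                            float leftForwardSpeed, float rightForwardSpeed,
                            float yawPerSpeed) {
    if (!leftGrip || !rightGrip) {
        return 0.0f;  // gesture requires both grips
    }
    // The hands move in opposite directions exactly when the product is negative.
    if (leftForwardSpeed * rightForwardSpeed >= 0.0f) {
        return 0.0f;
    }
    // Assumed convention: left hand forward, right hand back gives a positive yaw.
    return yawPerSpeed * 0.5f * (leftForwardSpeed - rightForwardSpeed);
}
```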

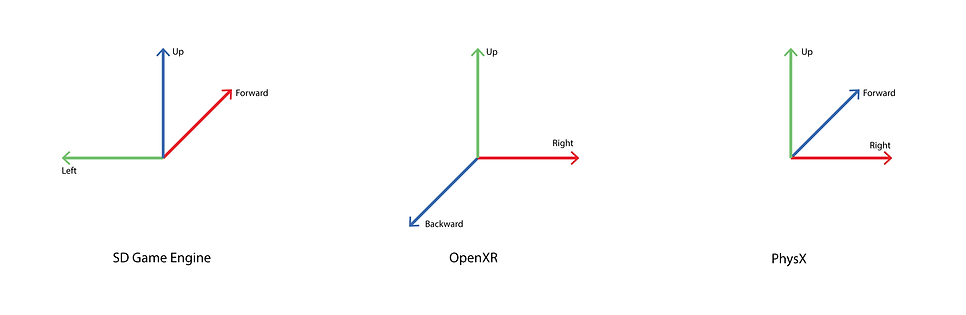

Coordinate Transformation

FACE YOUR ENEMIES

The designs of all three enemies incorporate the element of the eyeball, symbolizing their response to the existence of the Time Gallery and emphasizing the act of watching the exhibition.

As the window to the human mind, these eyes are bound by steel machinery—representing souls and flesh tormented by endless war, forever trapped in the eternal Time Gallery.

The models are sculpted in ZBrush to create high-poly versions, then baked into low-poly models using 3ds Max. I use Blender to rig and animate them, while Substance Painter is used to create reflective metallic surfaces and realistic flesh and blood vein textures.

CONTROL THE TIME

The Chronos’ Clock system integrates UI, power status, health, and items into the first-person view. In this game, players use the Chronos’ Clock to control time. For more design details about the Chronos’ Clock system, see the description in the picture on the right.
